🤖 AI Embedding

Hackathon

This week I have been playing around with AI models from Anthropic and Vertex AI at GitLab to familiarize myself with this new technology.

I have learned a lot of new skills and discovered how powerful those recents models are.

Detecting duplicate content

We have been working on a small project in order to identify when an issue contains the same information.

The idea was to convert the content of an issue an generating an embedding for it.

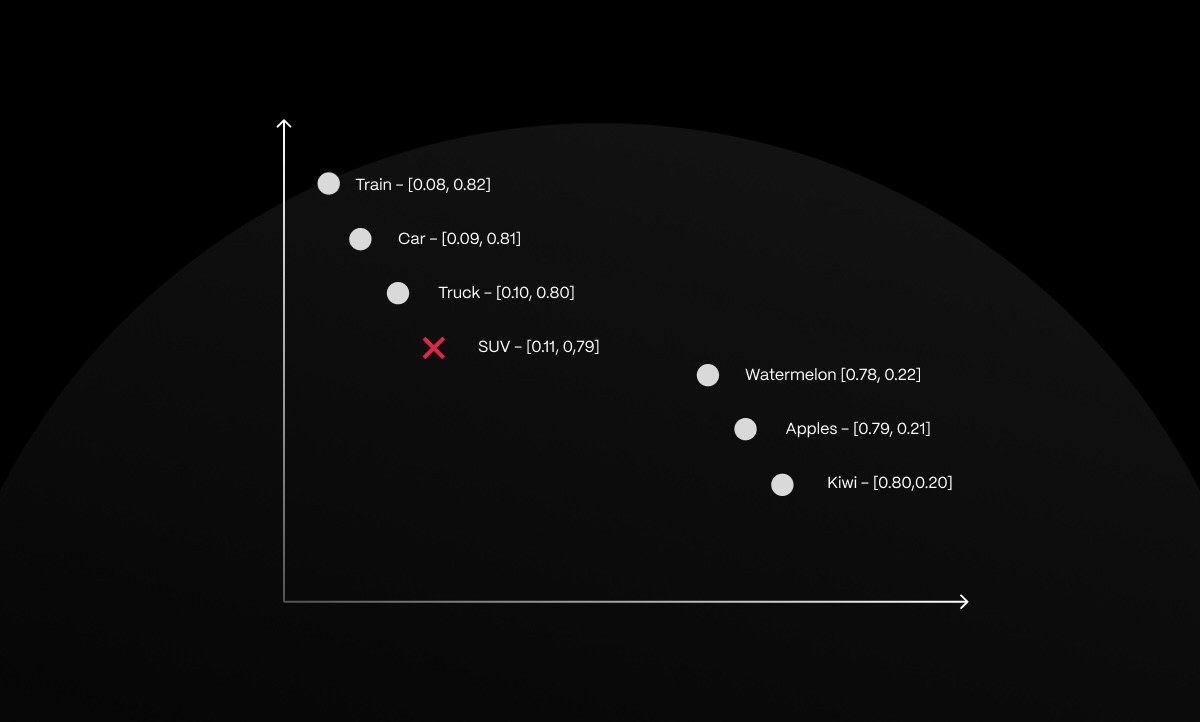

This technique is being called Semantic Similarity.

An embedding is a compressed numerical representation of a piece of text that captures its meaning and is generated by an ML model.

How we did it?

Note: The code is available here.

First we installed pgvector, a new postgres extension in order to store those records in our database:

$ brew install pgvector

CREATE EXTENSION vector;

Then we added a new column to persist those records in the issue table:

ALTER TABLE issues ADD COLUMN embedding vector(768);

And a new index to speed up the queries:

CREATE INDEX ON issues USING hnsw (embedding vector_l2_ops);

Finally we ran a script to import all existing issues for a given team and generating embedding for it.

To determine how similar an issue is with another one we leveraged the neighboor gem to measure the euclidean distance between those vectors:

nearest_issue = issue.nearest_neighbors(:embedding, distance: "euclidean").first

nearest_issue.neighbor_distance

=> 0.6025755637811935

This gives us a great approximation of how similar an issue with the rest of them and we need to adjust the distance to determine good threshold with our entire dataset.

For our use case, a distance of 0.3 gave us a good approximation of similar issues.